How Is Optical Circuit Switching Transforming Data Centers?

2025-10-25

As cloud computing, AI, and high-performance workloads continue to reshape digital infrastructure, the demand for higher bandwidth and lower latency inside data centers has never been greater. Traditional packet-switched architectures, while mature, are increasingly challenged by massive east–west traffic patterns.

Optical Circuit Switching (OCS) — a technology that establishes direct, all-optical paths between network endpoints — is emerging as a practical solution. By reducing optical–electrical–optical conversions and enabling deterministic data transmission, OCS enhances throughput, scalability, and energy efficiency in large-scale data centers.

Optical Circuit Switching creates a dedicated optical circuit (a continuous light path) between two network endpoints, so traffic stays entirely in the optical domain for the duration of the circuit. An optical circuit switch is the physical device that establishes and tears down these optical connections between fiber ports. Because an OCS forwards light without any per-packet electronic processing, it is protocol agnostic and can carry whatever optical signal the endpoints place on the fiber, at whatever line rate they use.
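
To make the port-level behavior concrete, here is a minimal Python sketch that models an optical circuit switch as a one-to-one mapping between fiber ports. The class name `OpticalCircuitSwitch` and its methods are hypothetical and do not correspond to any vendor API. The sketch also assumes duplex port pairs, whereas real devices often cross-connect input and output ports separately.

```python
from typing import Dict, Optional


class OpticalCircuitSwitch:
    """Toy model (hypothetical, not a vendor API): circuits as port pairs."""

    def __init__(self, num_ports: int) -> None:
        self.num_ports = num_ports
        # Maps each connected port to its peer; both directions are stored.
        self._peer: Dict[int, int] = {}

    def connect(self, a: int, b: int) -> None:
        """Establish a circuit between two idle ports."""
        for port in (a, b):
            if not 0 <= port < self.num_ports:
                raise ValueError(f"port {port} is out of range")
        if a == b:
            raise ValueError("a port cannot be connected to itself")
        if a in self._peer or b in self._peer:
            raise RuntimeError("port already carries a circuit")
        self._peer[a] = b
        self._peer[b] = a

    def disconnect(self, port: int) -> None:
        """Tear down the circuit that terminates on `port`."""
        other = self._peer.pop(port)  # raises KeyError if the port is idle
        del self._peer[other]

    def peer(self, port: int) -> Optional[int]:
        """Return the port at the far end of the circuit, or None if idle."""
        return self._peer.get(port)


ocs = OpticalCircuitSwitch(num_ports=4)
ocs.connect(0, 3)       # light entering port 0 now exits port 3
print(ocs.peer(0))      # far-end port of the circuit on port 0
ocs.disconnect(0)
print(ocs.peer(3))      # None: the circuit has been torn down
```

Nothing in the model inspects packets or protocols. The switch only tracks which port is joined to which, and that is the property that makes an OCS protocol agnostic.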

Several hardware approaches are used to implement OCS; each has tradeoffs in port count, insertion loss, reliability, and switching speed:

- 3D MEMS (micro-mirror) matrix switches: commercial, high-port-count MEMS switches physically redirect beams between fibers using arrays of tiny mirrors. They scale to hundreds of ports and are widely used for OCS deployments in production environments. MEMS OCSs are relatively mature and field-proven.

- Wavelength-selective switches (WSS), including LCoS-based WSS: these operate on individual wavelength channels within a WDM signal, so each wavelength on a fiber can be routed independently. WSS devices are valuable where wavelength granularity (rather than switching of whole fiber ports) is needed. LCoS implementations are common for flexible wavelength routing.

- Other approaches: optical MEMS variants, opto-mechanical patching, and emerging integrated silicon-photonic OCS designs each target specific points on the scalability / speed / cost tradeoff curve. Research and product activity continue to broaden choices.

Why OCS Matters for Data Centers

OCS delivers measurable benefits for certain classes of traffic and workloads, but those benefits must be framed with the technology’s limits in mind:

1. Higher sustained throughput for large flows. For bulk transfers or large synchronized exchanges (e.g., distributed ML model synchronization, large dataset shuffles), a dedicated optical circuit avoids repeated O-E-O conversion and can deliver full line-rate throughput between endpoints. This is one of OCS’s primary operational advantages.

2. Lower energy per bit for elephant flows carried on circuits. By avoiding intermediate electronic switching and buffering for the flows that use the circuit, OCS can reduce energy consumption per bit for those flows. Vendor white papers and independent analyses report significant energy and cost advantages when OCS carries large-volume, predictable flows. Realized savings, however, depend on how much traffic actually travels over circuits rather than through electronic packet switches.

3. Predictable latency on the established path. Once a circuit is up, the switch adds very little delay beyond the fiber's own propagation time, because no per-packet processing, buffering, or queuing takes place inside the OCS.

These strengths make OCS well suited to elephant-flow optimization (large, long-lived transfers), HPC interconnects, and large-scale ML workloads where predictable, sustained bandwidth is essential.
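
The dependence of energy savings on traffic mix can be illustrated with a simple weighted average. The sketch below is a back-of-the-envelope model, not a measurement: the function name and both per-bit energy figures are hypothetical placeholders chosen only to show the shape of the relationship.

```python
def blended_energy_per_bit(circuit_fraction: float,
                           packet_pj_per_bit: float,
                           circuit_pj_per_bit: float) -> float:
    """Average energy per bit when a fraction of traffic rides on circuits.

    circuit_fraction is the share of bits (0.0 to 1.0) carried over OCS;
    the remainder is assumed to cross the electronic packet fabric.
    """
    if not 0.0 <= circuit_fraction <= 1.0:
        raise ValueError("circuit_fraction must be between 0 and 1")
    return (circuit_fraction * circuit_pj_per_bit
            + (1.0 - circuit_fraction) * packet_pj_per_bit)


# Placeholder figures for illustration only (NOT measured values).
PACKET_PJ = 10.0   # assumed energy per bit through packet switches
CIRCUIT_PJ = 2.0   # assumed energy per bit over an optical circuit

for fraction in (0.0, 0.25, 0.5, 0.75, 1.0):
    blended = blended_energy_per_bit(fraction, PACKET_PJ, CIRCUIT_PJ)
    saving = 1.0 - blended / PACKET_PJ
    print(f"{fraction:.0%} on circuits: {blended:.1f} pJ/bit, saving {saving:.0%}")
```

Under this linear model the saving scales directly with the share of traffic moved onto circuits, which is why the benefit is tied so closely to how much of the workload consists of large, predictable flows.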

How OCS Is Typically Deployed

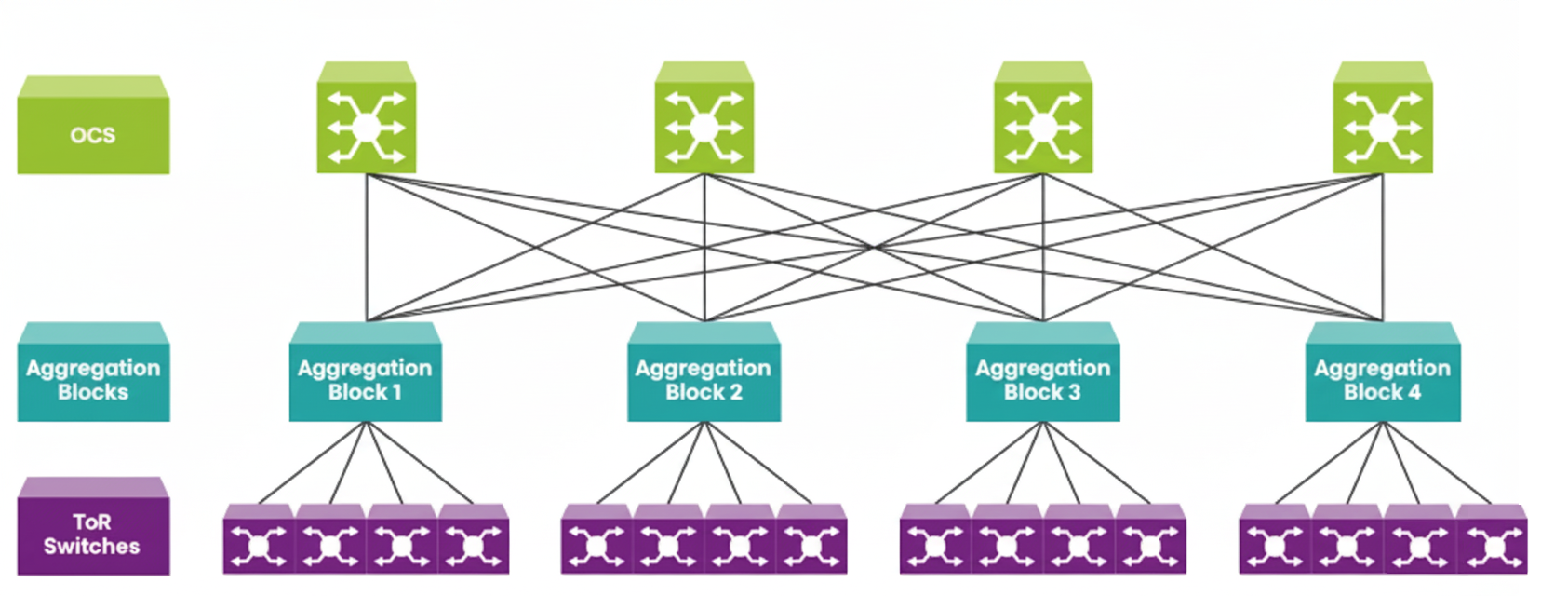

Practical adoption patterns in data centers favor hybrid architectures where OCS coexists with conventional electronic packet switching:

1. Packet layer (electrical) continues to handle latency-sensitive, short, bursty flows and general packet routing and forwarding functions.

2. OCS layer (optical) is used to provision circuits for known or detected large flows between racks/clusters.

3. Controller / Orchestration plane (often SDN-based, sometimes with workload-aware or ML-assisted scheduling) monitors traffic and dynamically provisions circuits when the expected traffic lifetime and volume make it efficient to do so.

This hybrid approach leverages OCS for sustained heavy flows while preserving the flexibility of packet switching for everything else. Several academic and industry designs (and production proofs of concept at hyperscalers) follow this hybrid model.
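
One way a controller might decide whether a flow justifies a circuit is to compare estimated completion times on the two layers. The following Python sketch assumes a deliberately simple model: the flow's remaining size is known, the packet fabric offers the flow a fixed share of bandwidth, and the circuit becomes usable only after a fixed reconfiguration delay. The function name, parameters, and numbers are hypothetical; production schedulers use richer inputs such as demand matrices and job-level hints.

```python
def should_provision_circuit(bytes_remaining: float,
                             packet_gbps: float,
                             circuit_gbps: float,
                             reconfig_s: float) -> bool:
    """Return True if the circuit path is expected to finish the flow sooner.

    packet_gbps  : bandwidth the flow is assumed to get on the packet fabric
    circuit_gbps : bandwidth of the dedicated optical circuit
    reconfig_s   : time the OCS needs before the circuit carries traffic
    """
    bits = bytes_remaining * 8
    time_on_packet = bits / (packet_gbps * 1e9)
    time_on_circuit = reconfig_s + bits / (circuit_gbps * 1e9)
    return time_on_circuit < time_on_packet


# Assumed figures: 10 Gb/s share on the packet fabric, 100 Gb/s circuit,
# 50 ms reconfiguration delay.
small_flow = should_provision_circuit(1e6, 10, 100, 0.05)    # 1 MB
large_flow = should_provision_circuit(10e9, 10, 100, 0.05)   # 10 GB
print(small_flow, large_flow)
```

With these assumed figures the 1 MB flow finishes on the packet fabric well before a circuit could even be set up, while the 10 GB flow easily amortizes the setup delay. That asymmetry is the reason the packet layer keeps the short flows.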

Typical Use Cases

1. Distributed machine learning and AI training: Large model-parallel or data-parallel jobs that require frequent, sustained synchronization between GPU clusters benefit from all-optical links that provide consistent aggregate bandwidth.

2. High-performance computing (HPC): HPC clusters with deterministic communication patterns can use OCS to simplify topologies and reduce software-level variability in communication times.

3. Bulk backup, replication, and offline data transfers: Scheduled replication jobs or planned bulk migrations are natural candidates for circuits that can be provisioned for the duration of the transfer.

Operational & Integration Challenges

1. Control plane complexity and scheduling overhead. Effective use of OCS requires good traffic measurement, prediction, and a scheduler that can make provisioning decisions fast enough to justify circuit creation. Some scheduling algorithms can introduce additional latency if not carefully engineered.

2. Interoperability with legacy packet fabrics and management systems. Deployments need well-defined northbound APIs and integration with orchestration systems to avoid operational friction.

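3. Granularity mismatch for mice flows. Because reconfiguration is relatively slow (tens to hundreds of milliseconds for many devices), a circuit set up for a short, bursty flow can spend more time switching than carrying traffic, which hurts utilization; hybrid designs are the standard mitigation.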
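
The utilization penalty for short-lived circuits can be estimated with a duty-cycle calculation. This is a rough sketch that assumes a port carries no traffic while it reconfigures and is fully used while the circuit is held; the function names and the 50 ms reconfiguration figure are illustrative assumptions.

```python
def circuit_duty_cycle(hold_s: float, reconfig_s: float) -> float:
    """Fraction of time a port carries traffic, given hold and switch times."""
    if hold_s < 0 or reconfig_s < 0 or hold_s + reconfig_s == 0:
        raise ValueError("times must be non-negative and not both zero")
    return hold_s / (hold_s + reconfig_s)


def min_hold_time(target_duty: float, reconfig_s: float) -> float:
    """Shortest hold time that reaches the target duty cycle (0 < target < 1)."""
    if not 0.0 < target_duty < 1.0:
        raise ValueError("target_duty must be strictly between 0 and 1")
    # Solve hold / (hold + reconfig) = target for hold.
    return reconfig_s * target_duty / (1.0 - target_duty)


RECONFIG_S = 0.05  # assumed 50 ms reconfiguration time

for hold in (0.01, 0.5, 10.0):
    duty = circuit_duty_cycle(hold, RECONFIG_S)
    print(f"hold {hold:>5} s -> duty cycle {duty:.1%}")

print(f"hold needed for 90% duty: {min_hold_time(0.9, RECONFIG_S):.2f} s")
```

Under these assumptions, a circuit held for only 10 ms leaves the port idle most of the time, whereas one held for several seconds loses almost nothing to switching.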

OCS is a pragmatic, field-tested technology for reducing O-E-O conversions, lowering energy per bit for large flows, and providing very high sustained bandwidth between endpoints. However, its practical value depends on workload composition and system design: OCS is most effective when used selectively for long-lived, high-volume flows and controlled via an orchestration layer that keeps the packet fabric for short, latency-sensitive traffic. Data centers pursuing OCS should plan for orchestration complexity, account for switch reconfiguration times in the tens to hundreds of milliseconds for many devices, and evaluate ROI against their specific traffic profile. When those conditions are met, OCS is a powerful component in a hybrid fabric that improves overall bandwidth efficiency and energy characteristics for large-scale, data-intensive operations.